Applications inevitably run on data. Whether it's a high-traffic customer portal or a microservice-powered backend, the performance and reliability of your databases directly affect everything else. As systems scale and architectures evolve, however, it becomes harder to detect what's wrong and where.

That's where database observability comes in—not necessarily as a replacement for traditional monitoring, but as a complementary approach that builds upon it.

Database observability is the ability to understand a database's internal state and operations by analyzing the external signals it emits, chiefly metrics, logs, and traces. Basic monitoring primarily tells you that an issue exists. Observability aims to explain why it exists, often by building on the data that monitoring already collects. These signals do not exist in isolation. A latency spike in the metrics, a lock-timeout entry in the logs, and a slow span in a trace may all describe the same event. True observability depends on understanding these interdependencies, so that the data can be correlated and the root cause pinpointed. Monitoring remains a valuable practice in its own right: it supplies the raw data that observability tools then use for deeper analysis and root cause identification.

Understand what's happening inside your database

Monitoring answers the "what": Is the CPU spiking? Are there too many connections? Observability answers the "why."

By collecting and correlating signals across logs, metrics, and traces, observability platforms help you understand the behavior of your database workloads, queries, and infrastructure in real time.

- Track query performance in context: Go beyond execution time and see how queries impact resource usage, locking, and downstream services.

- Surface root causes faster: Combine query stats with logs and trace data to pinpoint slowdowns, deadlocks, or contention issues.

- Visualize transaction flows: Trace how a request moves through your stack and see exactly where the latency originates—whether it's in the app, network, or database.
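To make "see exactly where the latency originates" concrete, here is a minimal sketch that splits one request's time across layers using span self time. Self time is a span's duration minus the time spent in its direct children. The `Span` fields and the "app"/"network"/"database" layer labels are hypothetical. Real tracing systems such as OpenTelemetry record much richer span data. The sketch also assumes that a span's children do not overlap one another.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional

@dataclass
class Span:
    span_id: str
    parent_id: Optional[str]
    layer: str        # hypothetical tag: "app", "network", or "database"
    start_ms: float
    end_ms: float

    @property
    def duration_ms(self) -> float:
        return self.end_ms - self.start_ms

def self_time_by_layer(spans):
    """Attribute each span's self time (duration minus direct children) to its layer.

    Assumes sibling spans do not overlap.
    """
    child_total = defaultdict(float)
    for s in spans:
        if s.parent_id is not None:
            child_total[s.parent_id] += s.duration_ms
    totals = defaultdict(float)
    for s in spans:
        totals[s.layer] += s.duration_ms - child_total[s.span_id]
    return dict(totals)

# Hypothetical trace: a request handler that makes one database call.
trace = [
    Span("handler", None, "app", 0, 250),
    Span("db-call", "handler", "network", 20, 230),
    Span("query", "db-call", "database", 30, 220),
]
breakdown = self_time_by_layer(trace)
print(breakdown)                          # {'app': 40.0, 'network': 20.0, 'database': 190.0}
print(max(breakdown, key=breakdown.get))  # database
```

In this example, 190 of the 250 ms are spent inside the database, so the investigation should start there rather than in the application code.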

From slow queries to structural bottlenecks: catch it all early

Not all issues are obvious. Some build up gradually, like a table growing too large without indexing, or a query performing fine in staging but failing in production under real-world load. Observability makes these patterns visible early on.

- Detect inefficient queries and missing indexes: Pinpoint queries performing full table scans or excessive data reads due to missing indexes, leading to slow response times and high CPU utilization.

- Analyze execution plans and locking patterns: Use execution plans to see why slow queries are slow, and examine lock activity to uncover deadlocks or excessive locking that cause application slowdowns and concurrency issues.

- Spot schema changes that degrade performance: Detect recent schema alterations, such as a newly added column that queries filter on but that has no index, that slow queries down and increase resource consumption.

- Identify replication lag or unexpected traffic bursts: Track replication status so that growing lag is caught before replicas serve stale or inconsistent data. Detect sudden traffic spikes before they overwhelm the database and degrade performance or cause outages.
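One concrete way to spot a missing index is to ask the database for its query plan. The sketch below uses Python's built-in `sqlite3` module so it runs without a server. The `orders` table and the `full_scans` helper are hypothetical. SQLite describes a full table scan as a plan step whose detail text starts with `SCAN`, and an index lookup as `SEARCH`. Other engines expose the same idea through their own `EXPLAIN` output, in different formats.

```python
import sqlite3

def full_scans(conn, sql, params=()):
    """Return the query-plan steps in which SQLite scans an entire table."""
    plan = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    # Each row is (id, parent, notused, detail). The detail starts with "SCAN"
    # for a full scan and with "SEARCH" when an index narrows the lookup.
    return [row[3] for row in plan if row[3].startswith("SCAN")]

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
query = "SELECT total FROM orders WHERE customer_id = ?"

before = full_scans(conn, query, (42,))  # no index on customer_id yet
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = full_scans(conn, query, (42,))   # the planner can now use the index

print(before)  # e.g. ['SCAN orders'] (older SQLite versions say 'SCAN TABLE orders')
print(after)   # []
```

A check like this can run in CI against the queries an application actually issues, so that a missing index is flagged before the table grows large enough for users to notice.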

With continuous visibility, performance regressions don't go unnoticed—and troubleshooting doesn't start with guesswork.

Works across your entire database landscape

Today, data architectures are anything but uniform. Organizations often run a mix of databases: relational and NoSQL, on-premises and cloud-managed. Observability must meet you where your data lives.

Supported environments typically include:

- Relational databases: PostgreSQL, MySQL, Microsoft SQL Server, Oracle, and MariaDB

- NoSQL engines: MongoDB, Redis, and Cassandra

- Cloud-native services: Amazon RDS, Aurora, Google Cloud SQL, and Azure SQL

This breadth ensures you don't have to stitch together fragmented views or jump between tools.

Roadmap to database observability

Transitioning from basic monitoring to comprehensive database observability can seem daunting, but a phased approach can ensure success. Here's a roadmap and best practices to guide your journey:

Phase 1: Assessment and planning

- Define goals: Decide what you want to achieve with observability. Are you aiming to reduce MTTR (mean time to resolution), improve application performance, or identify potential issues proactively?

- Inventory your database landscape: Document all your databases (relational, NoSQL, and cloud-managed), their versions, and their roles in your applications.

- Identify key metrics: Determine the critical metrics for each database type. Examples include:

  - Relational: CPU utilization, query latency, active connections, buffer cache hit ratio, and deadlocks.

  - NoSQL: Throughput (reads/writes per second), latency, storage utilization, and node health.

- Choose the right tools: Select observability tools that support your diverse database landscape and offer the features you need (metrics, logs, traces, correlation). Consider factors like scalability, ease of use, and integration with your existing infrastructure.
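Many of these metrics are derived from cumulative counters that the database exposes. A collector therefore usually samples the counters periodically and works with the difference between two samples. The sketch below shows this approach under stated assumptions: the `CounterSnapshot` field names are hypothetical. In PostgreSQL, for example, the cache counters would correspond to `blks_hit` and `blks_read` in the `pg_stat_database` view. Computing over an interval, rather than over lifetime totals, prevents days of healthy history from hiding a recent drop in the hit ratio.

```python
from dataclasses import dataclass

@dataclass
class CounterSnapshot:
    """Cumulative counters sampled at one moment (field names are hypothetical)."""
    timestamp_s: float
    blocks_hit: int   # block requests served from the buffer cache
    blocks_read: int  # block requests that had to go to disk
    reads: int        # read operations completed since startup
    writes: int       # write operations completed since startup

def interval_metrics(prev: CounterSnapshot, curr: CounterSnapshot) -> dict:
    """Derive a hit ratio and throughput rates for the interval between two snapshots."""
    elapsed = curr.timestamp_s - prev.timestamp_s
    if elapsed <= 0:
        raise ValueError("snapshots must be in chronological order")
    hits = curr.blocks_hit - prev.blocks_hit
    misses = curr.blocks_read - prev.blocks_read
    if hits < 0 or misses < 0:
        raise ValueError("cache counter went backwards; the server may have restarted")
    lookups = hits + misses
    return {
        # None when nothing was looked up, rather than a misleading 0 or 1.
        "cache_hit_ratio": hits / lookups if lookups else None,
        "reads_per_s": (curr.reads - prev.reads) / elapsed,
        "writes_per_s": (curr.writes - prev.writes) / elapsed,
    }

prev = CounterSnapshot(0, 9_000, 1_000, 5_000, 1_000)
curr = CounterSnapshot(60, 18_900, 1_100, 11_000, 2_200)
metrics = interval_metrics(prev, curr)
print(metrics)  # {'cache_hit_ratio': 0.99, 'reads_per_s': 100.0, 'writes_per_s': 20.0}
```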

Phase 2: Implementation and configuration

- Start with key databases: Begin by implementing observability on your most critical databases or those experiencing frequent issues.

- Configure data collection: Set up your chosen tools to collect the defined key metrics, logs, and traces from your databases. Ensure data is collected securely and efficiently.

- Establish baselines: Observe your databases over a representative period, covering both peak and off-peak load, to establish performance baselines. Baselines make it possible to tell genuine anomalies apart from normal variation.

- Set up alerts: Configure alerts based on deviations from baselines or predefined thresholds. Focus on alerts that are actionable and meaningful.
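Baselines and alerts work together. The following minimal sketch combines a fixed threshold with a deviation-from-baseline rule. It measures deviation with a z-score (distance from the historical mean in standard deviations). That choice is just one simple option for anomaly detection. It is not the method any particular tool uses. The latency history, the 3.0 limit, and the 50 ms threshold are all hypothetical values.

```python
import statistics

def build_baseline(samples):
    """Summarize 'normal' as the mean and sample standard deviation (needs >= 2 samples)."""
    return statistics.mean(samples), statistics.stdev(samples)

def alert_reasons(value, baseline, z_limit=3.0, hard_limit=None):
    """Return the reasons `value` should raise an alert; an empty list means no alert."""
    mean, stdev = baseline
    reasons = []
    # Predefined threshold: fires regardless of history.
    if hard_limit is not None and value > hard_limit:
        reasons.append(f"exceeds fixed threshold of {hard_limit}")
    # Baseline deviation: fires when the value is unusually far from its own history.
    if stdev > 0:
        z = (value - mean) / stdev
        if abs(z) > z_limit:
            reasons.append(f"{z:.1f} standard deviations from baseline")
    return reasons

# Hypothetical p95 query latencies (ms) gathered during the baselining period.
history = [10, 12, 11, 13, 12, 11, 10, 12, 13, 11]
baseline = build_baseline(history)

print(alert_reasons(12.5, baseline, hard_limit=50))  # []
print(alert_reasons(25, baseline, hard_limit=50))    # one reason: deviation from baseline
print(alert_reasons(60, baseline, hard_limit=50))    # two reasons: threshold and deviation
```

The deviation rule catches the jump to 25 ms that a 50 ms threshold alone would miss. For metrics with daily or weekly cycles, a baseline per hour of day is usually fairer than a single global one.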

Phase 3: Analysis and optimization

- Correlate data: Use your observability tools to correlate metrics, logs, and traces to understand the relationships between different events and identify root causes of issues.

- Analyze execution plans: Regularly examine query execution plans to identify inefficient queries and optimize them.

- Optimize indexes: Identify missing or underutilized indexes and create or modify them to improve query performance.

- Automate remediation: Where possible, automate remediation tasks based on observability data. For example, automatically scale up database resources when traffic exceeds a certain threshold.
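Correlation is easiest when signals share a key. The sketch below shows one way to correlate in practice. It assumes that the application propagates a trace ID into its SQL (for example, as a query comment), so that slow-query log entries can be joined to the traces of the requests that issued them. All record shapes, IDs, and the 50% cutoff are hypothetical.

```python
from collections import defaultdict

# Hypothetical trace summaries, keyed by trace ID.
traces = {
    "t1": {"endpoint": "GET /orders", "total_ms": 2100},
    "t2": {"endpoint": "GET /profile", "total_ms": 300},
    "t3": {"endpoint": "GET /orders", "total_ms": 1900},
}

# Hypothetical slow-query log entries that carry the same trace IDs.
slow_queries = [
    {"trace_id": "t1", "duration_ms": 1800, "sql": "SELECT * FROM orders WHERE customer_id = ?"},
    {"trace_id": "t2", "duration_ms": 120, "sql": "SELECT * FROM users WHERE id = ?"},
    {"trace_id": "t3", "duration_ms": 1650, "sql": "SELECT * FROM orders WHERE customer_id = ?"},
    {"trace_id": "t9", "duration_ms": 900, "sql": "DELETE FROM sessions WHERE expires_at < ?"},
]

def queries_dominating_requests(slow_queries, traces, min_share=0.5):
    """Group by endpoint the queries that take at least `min_share` of their request's time."""
    findings = defaultdict(list)
    for entry in slow_queries:
        trace = traces.get(entry["trace_id"])
        if trace is None:
            continue  # no matching trace, e.g. the request was not sampled
        share = entry["duration_ms"] / trace["total_ms"]
        if share >= min_share:
            findings[trace["endpoint"]].append((entry["sql"], round(share, 2)))
    return dict(findings)

findings = queries_dominating_requests(slow_queries, traces)
for endpoint, hits in findings.items():
    print(endpoint, hits)
```

Here, one query on `orders` accounts for most of the latency of `GET /orders`, which points directly at the index work described in the next steps. The `users` query is slow in absolute terms but is not what makes its request slow.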

Best practices for a successful transition:

- Start small and iterate: Don't try to implement observability across your entire database landscape at once. Start with a pilot project and gradually expand.

- Involve your team: Ensure your database administrators, developers, and operations teams are all involved in the observability implementation.

- Provide training: Train your team on how to use the observability tools and interpret the data.

- Continuously monitor and refine: Regularly review your observability setup and make adjustments as needed to ensure it continues to meet your needs.

- Focus on actionable insights: Don't just collect data for the sake of collecting data. Focus on generating actionable insights that can improve database performance and reliability.

This roadmap and these best practices can help teams move from basic monitoring to comprehensive database observability, leading to better database performance, less downtime, and faster problem resolution.

Designed for every role in the engineering stack

Database observability isn't just for DBAs. It empowers every stakeholder—from developers writing queries to SREs maintaining SLAs.

- DevOps teams can catch regressions in the CI/CD pipeline or immediately after a deployment, before they reach end users, reducing the risk of widespread outages.

- DBAs can optimize queries, tune memory settings, and track slowdowns over time, proactively ensuring optimal database performance and preventing bottlenecks.

- SREs can correlate system-wide latency to database behavior, quickly identifying if the database is the root cause of application performance issues and improving overall system reliability.

- Developers can test the impact of schema or query changes with full transparency, seeing how their code affects database performance in real time and avoiding costly mistakes in production.

The result is fewer silos, faster incident response, and more resilient systems.

Monitoring vs observability: What's the difference?

It's common to confuse the two—but observability goes deeper.

Monitoring alerts you when something is wrong, and observability tells you why it's wrong, how widespread the impact is, and what to do next. Instead of reacting to symptoms, teams can proactively uncover the source of problems—even those they weren't explicitly looking for.

- Monitoring relies on predefined, threshold-based alerts focused on known failure points.

- Observability is exploratory, real-time, and capable of diagnosing novel or unknown issues.

Database observability: A strategic investment in stability and speed

Every second of downtime or slow performance in your data layer translates to lost revenue, frustrated users, and strained engineering teams. Database observability helps you stay ahead—not by adding more noise, but by delivering actionable insight when and where it's needed.

Whether you're migrating to the cloud, scaling microservices, or handling growing data volumes, observability gives you confidence that your database infrastructure is healthy, performant, and ready to support what's next.

Database observability, powered by Site24x7

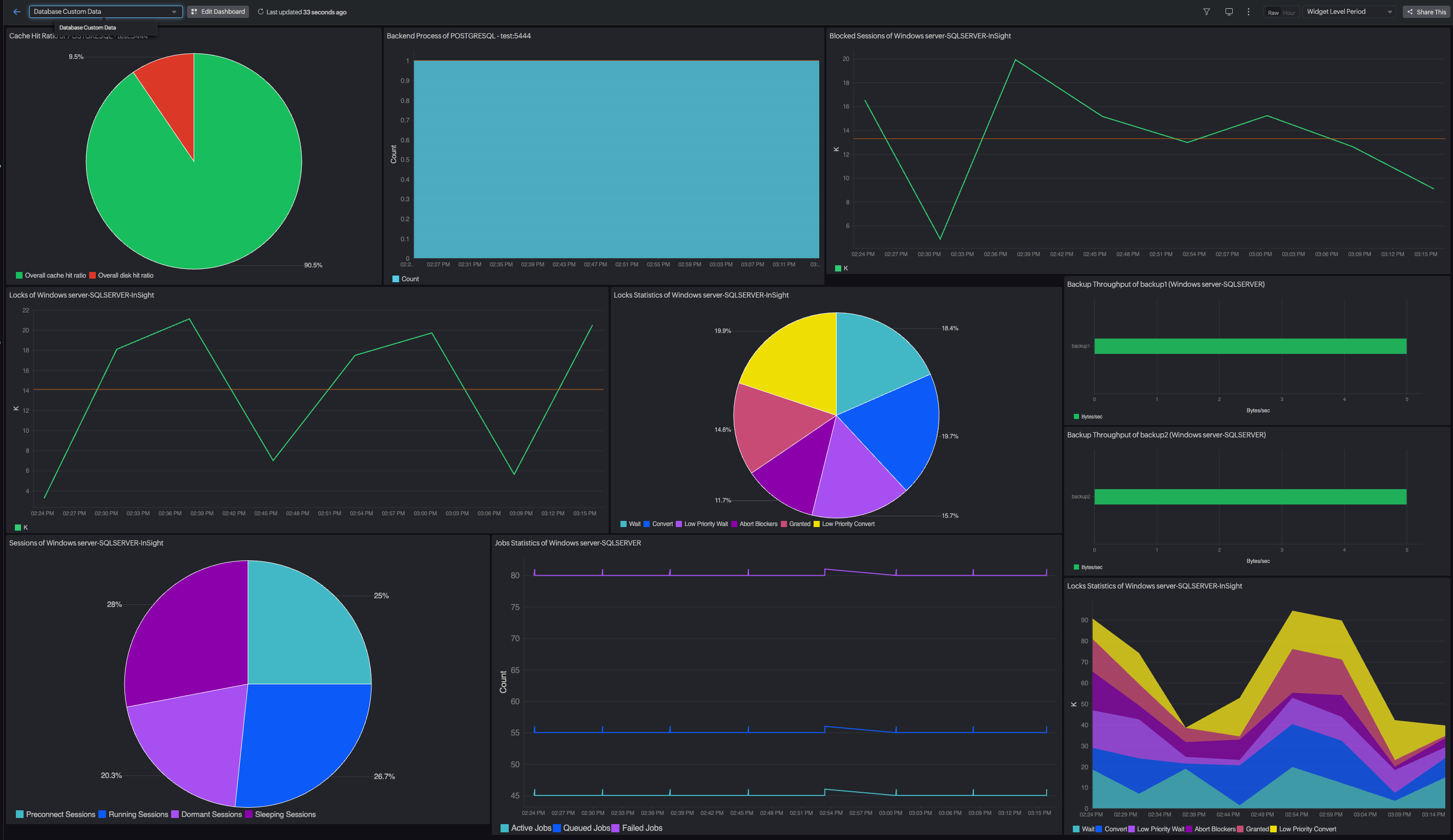

Site24x7 brings unified observability to your entire database ecosystem—across relational, NoSQL, on-prem, and cloud-native environments. Go beyond basic monitoring to get deep, actionable insights into query performance, resource usage, locking behavior, and schema efficiency.

With Site24x7, you can:

- Correlate logs, metrics, and traces to uncover the root cause of slowdowns and anomalies.

- Track slow queries across engines like MySQL, SQL Server, Oracle, PostgreSQL, and more.

- Visualize transaction flows and spot bottlenecks in real time.

- Monitor database health continuously, across hybrid and multi-cloud architectures.

- Enable collaboration by equipping developers, DBAs, and SREs with the same real-time insights.

Whether you're scaling workloads, optimizing performance, or preventing outages, Site24x7 helps you stay ahead of issues—proactively, intelligently, and at scale.